Date: 8-8-2016

Date: 9-8-2016

Date: 30-8-2016


Entropy Form

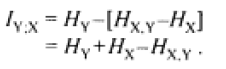

The last quantity on the right, the conditional entropy $H(Y|X)$, can be written as $H(X,Y) - H(X)$. Substituting that difference in place of $H(Y|X)$ gives:

$$I(Y;X) = H(Y) + H(X) - H(X,Y) \qquad (1)$$

In this form, mutual information is the sum of the two self-entropies minus the joint entropy.
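To make Equation 1 concrete, here is a minimal Python sketch that computes the entropies of a small two-variable distribution and checks that the conditional-entropy form, assumed here to be the standard $I(Y;X) = H(Y) - H(Y|X)$, agrees with Equation 1. The joint probabilities are made-up illustration values, logarithms are taken base 2 so results are in bits, and the helper name `entropy` is hypothetical.

```python
import math

def entropy(probs):
    """Shannon entropy in bits; zero-probability entries contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical joint distribution p(x, y): rows index x, columns index y.
joint = [
    [0.30, 0.10],
    [0.15, 0.45],
]

p_x = [sum(row) for row in joint]            # marginal distribution of X
p_y = [sum(col) for col in zip(*joint)]      # marginal distribution of Y

H_X = entropy(p_x)
H_Y = entropy(p_y)
H_XY = entropy(p for row in joint for p in row)  # joint entropy H(X,Y)

# H(Y|X) computed directly: average of H(Y | X = x), weighted by p(x).
H_Y_given_X = sum(
    px * entropy(p / px for p in row)
    for px, row in zip(p_x, joint)
    if px > 0
)

I_start = H_Y - H_Y_given_X   # I(Y;X) = H(Y) - H(Y|X)
I_eq1 = H_Y + H_X - H_XY      # Equation 1

print(f"H(Y|X) = {H_Y_given_X:.6f}, H(X,Y) - H(X) = {H_XY - H_X:.6f}")
print(f"I(Y;X): {I_start:.6f} bits (from H(Y|X)), {I_eq1:.6f} bits (Equation 1)")
```

Because the two values agree for any valid joint table, the check also confirms the substitution $H(Y|X) = H(X,Y) - H(X)$ that leads to Equation 1.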

We used the mutually associated case to derive Equation 1. However, Equation 1 also applies to the mutually unassociated case, for which the mutual information turns out to be zero. To see that, recall that for unassociated (independent) systems the joint entropy is simply $H(X,Y) = H(X) + H(Y)$. Substituting $H(X) + H(Y)$ in place of $H(X,Y)$ in Equation 1 gives $I(Y;X) = H(Y) + H(X) - [H(X) + H(Y)]$, or $I(Y;X) = 0$. So the mutual information of two independent systems is zero; in other words, one system tells us nothing about the other. Incidentally, rearranging Equation 1 provides an alternate expression for the joint entropy $H(X,Y)$:
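The independent case can be checked numerically. This minimal sketch uses base-2 logarithms and made-up marginal probabilities, builds the joint table as the product $p(x)\,p(y)$ (which is what "unassociated" means here), and evaluates Equation 1; the result is zero up to floating-point rounding. The helper name `entropy` is hypothetical.

```python
import math

def entropy(probs):
    """Shannon entropy in bits; zero-probability entries contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical marginals for two unassociated (independent) systems.
p_x = [0.2, 0.5, 0.3]
p_y = [0.6, 0.4]

# Independence means p(x, y) = p(x) * p(y) for every pair (x, y).
joint = [[px * py for py in p_y] for px in p_x]

H_X = entropy(p_x)
H_Y = entropy(p_y)
H_XY = entropy(p for row in joint for p in row)

# Equation 1; for independent systems this should vanish (up to rounding).
I = H_Y + H_X - H_XY
print(f"H(X,Y) = {H_XY:.6f}, H(X) + H(Y) = {H_X + H_Y:.6f}, I(Y;X) = {I:.2e}")
```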

$$H(X,Y) = H(Y) + H(X) - I(Y;X) \qquad (2)$$

Joint entropy for two systems or dimensions, whether mutually related or not, is therefore the sum of the two self-entropies minus the mutual information. (For two unrelated systems, the mutual information $I(Y;X)$ is zero, and Equation 2 reduces to $H(X,Y) = H(Y) + H(X)$.)
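Equation 2 can be checked against a value of mutual information obtained without going through the entropies. The minimal sketch below computes $I(Y;X)$ from its standard definition, $\sum_{x,y} p(x,y)\log_2\frac{p(x,y)}{p(x)\,p(y)}$ (a definition not stated in this section), rebuilds $H(X,Y)$ with Equation 2, and compares the result with the joint entropy computed directly. The 3×3 joint table is invented for illustration, and the function names `entropy` and `mutual_information` are hypothetical.

```python
import math

def entropy(probs):
    """Shannon entropy in bits; zero-probability entries contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def mutual_information(joint):
    """I(Y;X) from its definition: sum of p(x,y) * log2[p(x,y) / (p(x) p(y))]."""
    p_x = [sum(row) for row in joint]
    p_y = [sum(col) for col in zip(*joint)]
    return sum(
        pxy * math.log2(pxy / (p_x[i] * p_y[j]))
        for i, row in enumerate(joint)
        for j, pxy in enumerate(row)
        if pxy > 0
    )

# Hypothetical joint distribution p(x, y) for two related systems.
joint = [
    [0.25, 0.05, 0.00],
    [0.05, 0.30, 0.10],
    [0.00, 0.05, 0.20],
]

H_X = entropy(sum(row) for row in joint)
H_Y = entropy(sum(col) for col in zip(*joint))
I = mutual_information(joint)

H_XY_eq2 = H_Y + H_X - I                                # Equation 2
H_XY_direct = entropy(p for row in joint for p in row)  # directly from p(x, y)

print(f"H(X,Y) via Equation 2:    {H_XY_eq2:.6f} bits")
print(f"H(X,Y) computed directly: {H_XY_direct:.6f} bits")
```

Because mutual information is never negative, Equation 2 also implies $H(X,Y) \le H(X) + H(Y)$, with equality only when the two systems are unrelated.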
