
CONTROLLABILITY, BANG-BANG PRINCIPLE: OBSERVABILITY

Author: Lawrence C. Evans

Source: An Introduction to Mathematical Optimal Control Theory

Pages: 24-26

6-10-2016

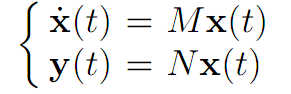

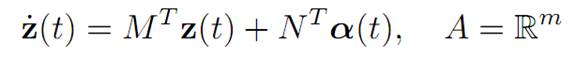

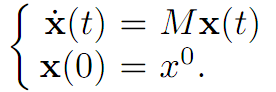

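We again consider the linear system of ODE

$$\text{(ODE)}\qquad \begin{cases}\dot{x}(t) = Mx(t) & (t > 0)\\ x(0) = x^0,\end{cases}$$

where $M \in \mathbb{M}^{n\times n}$.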

In this section we address the observability problem, modeled as follows. We suppose that we can observe

$$\text{(O)}\qquad y(t) := Nx(t) \qquad (t \geq 0),$$

for a given matrix $N \in \mathbb{M}^{m\times n}$. Consequently, $y(t) \in \mathbb{R}^m$. The interesting situation is when $m \ll n$ and we interpret $y(\cdot)$ as low-dimensional “observations” or “measurements” of the high-dimensional dynamics $x(\cdot)$.

OBSERVABILITY QUESTION: Given the observations $y(\cdot)$, can we in principle reconstruct $x(\cdot)$? In particular, do observations of $y(\cdot)$ provide enough information for us to deduce the initial value $x^0$ for (ODE)?

DEFINITION. The pair (ODE),(O) is called observable if the knowledge of $y(\cdot)$ on any time interval $[0, t]$ allows us to compute $x^0$.

More precisely, (ODE),(O) is observable if for all solutions $x_1(\cdot)$, $x_2(\cdot)$, $Nx_1(\cdot) \equiv Nx_2(\cdot)$ on a time interval $[0, t]$ implies $x_1(0) = x_2(0)$.

TWO SIMPLE EXAMPLES. (i) If N ≡ 0, then clearly the system is not observable.

(ii) On the other hand, if $m = n$ and $N$ is invertible, then clearly $x(t) = N^{-1}y(t)$, so the system is observable.

The interesting cases lie between these extremes.
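To make the definition concrete, here is a small sketch of a case between these extremes that fails to be observable. The matrices are hypothetical choices, not taken from the text: $M = \operatorname{diag}(1, 2)$ and $N = [1\ \ 0]$, so $y(t)$ sees only the first coordinate of $x(t)$. Two initial values that agree in that coordinate then produce identical observations.

```python
import math

# Hypothetical example (not from the text): M = diag(1, 2), N = [1, 0].
# For diagonal M the solution of x' = Mx, x(0) = x0 is known in closed form.

def solution(x0, t):
    """Exact solution x(t) = e^{tM} x0 for the diagonal matrix M = diag(1, 2)."""
    return [x0[0] * math.exp(t), x0[1] * math.exp(2 * t)]

def observe(x):
    """Observation y = N x with N = [1, 0]: only the first coordinate is measured."""
    return x[0]

x1_0 = [1.0, 0.0]   # first initial value
x2_0 = [1.0, 5.0]   # second initial value, differing only in the unmeasured coordinate
times = [k / 10 for k in range(11)]  # sample times in [0, 1]

y1 = [observe(solution(x1_0, t)) for t in times]
y2 = [observe(solution(x2_0, t)) for t in times]

print(y1 == y2)      # True: the observations coincide at every sample time
print(x1_0 == x2_0)  # False: yet the initial values differ, so the pair is not observable
```

Sampling at finitely many times is only an illustration; here $y_1(t) = y_2(t) = e^t$ for every $t$, so the observations agree on the whole interval.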

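THEOREM 1.1 (OBSERVABILITY AND CONTROLLABILITY). The system

$$\begin{cases}\dot{x}(t) = Mx(t)\\ y(t) = Nx(t)\end{cases} \tag{1.11}$$

is observable if and only if the system

$$\dot{x}(t) = M^{T}x(t) + N^{T}\alpha(t), \qquad \alpha(t) \in A = \mathbb{R}^{m} \tag{1.12}$$

is controllable, meaning that its controllable set $\mathcal{C}$ equals $\mathbb{R}^n$.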

INTERPRETATION. This theorem asserts that somehow “observability and controllability are dual concepts” for linear systems.
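Combining the two steps of the proof, (1.11) is observable exactly when $\operatorname{rank}[N^T, M^TN^T, \dots, (M^T)^{n-1}N^T] = n$. The sketch below checks this with exact rational arithmetic; the helper names (`matmul`, `rank`, `observability_matrix`, `controllability_matrix`, `is_observable`) and the double-integrator example are hypothetical, not from the text. It also confirms the duality on one example: transposing the controllability matrix of $(M^T, N^T)$ gives the stacked matrix $[N; NM; \dots; NM^{n-1}]$.

```python
from fractions import Fraction

def matmul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def transpose(A):
    return [list(row) for row in zip(*A)]

def rank(A):
    """Rank via Gaussian elimination over the rationals (exact, no rounding)."""
    rows = [[Fraction(v) for v in row] for row in A]
    r = 0
    for c in range(len(rows[0])):
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(len(rows)):
            if i != r and rows[i][c] != 0:
                f = rows[i][c] / rows[r][c]
                rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def observability_matrix(M, N):
    """Stack N, NM, ..., NM^{n-1} on top of each other."""
    blocks, P = [], N
    for _ in range(len(M)):
        blocks.extend(P)
        P = matmul(P, M)
    return blocks

def controllability_matrix(A, B):
    """Place B, AB, ..., A^{n-1}B side by side."""
    n, blocks, P = len(A), [], B
    for _ in range(n):
        blocks.append(P)
        P = matmul(A, P)
    return [[v for blk in blocks for v in blk[i]] for i in range(n)]

def is_observable(M, N):
    return rank(observability_matrix(M, N)) == len(M)

# Hypothetical example: double integrator, x = (position, velocity).
M = [[0, 1], [0, 0]]
print(is_observable(M, [[1, 0]]))  # True: measuring position suffices
print(is_observable(M, [[0, 1]]))  # False: velocity alone cannot recover the initial position

# Duality check for N = [1, 0]: controllability matrix of (M^T, N^T), transposed.
N = [[1, 0]]
dual = transpose(controllability_matrix(transpose(M), transpose(N)))
print(dual == observability_matrix(M, N))  # True
```

Exact `Fraction` arithmetic is a design choice for small examples: it avoids the tolerance question that a floating-point rank computation would raise.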

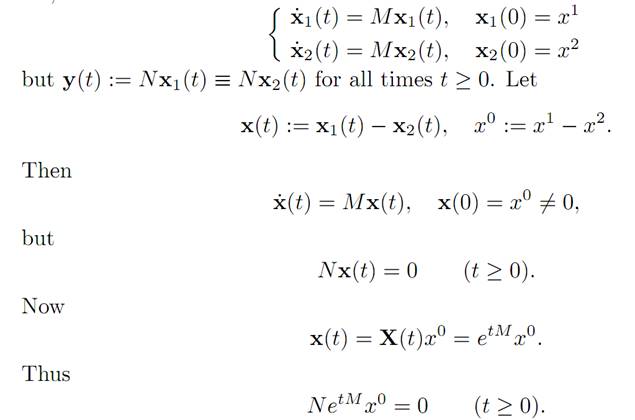

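Proof. 1. Suppose (1.11) is not observable. Then there exist points $x^1 \neq x^2 \in \mathbb{R}^n$ such that

$$\begin{cases}\dot{x}_1(t) = Mx_1(t), & x_1(0) = x^1\\ \dot{x}_2(t) = Mx_2(t), & x_2(0) = x^2\end{cases}$$

but $y(t) := Nx_1(t) \equiv Nx_2(t)$ for all times $t \geq 0$. Let

$$x(t) := x_1(t) - x_2(t), \qquad x^0 := x^1 - x^2.$$

Then $\dot{x}(t) = Mx(t)$ and $x(0) = x^0 \neq 0$, but $Nx(t) = 0$ for $t \geq 0$. Now $x(t) = e^{tM}x^0$, and thus

$$Ne^{tM}x^0 = 0 \qquad (t \geq 0).$$

Let $t = 0$ to find $Nx^0 = 0$. Then differentiate this expression $k$ times in $t$ and let $t = 0$ to discover as well that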

$$NM^kx^0 = 0$$

for $k = 0, 1, 2, \dots$. Hence $(x^0)^T(M^k)^TN^T = 0$, and hence $(x^0)^T(M^T)^kN^T = 0$.

This implies

$$(x^0)^T\left[N^T, M^TN^T, \dots, (M^T)^{n-1}N^T\right] = 0.$$

Since $x^0 \neq 0$, $\operatorname{rank}[N^T, \dots, (M^T)^{n-1}N^T] < n$. Thus problem (1.12) is not controllable. Consequently, if (1.12) is controllable, then (1.11) is observable.
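The two facts used in step 1 can be spelled out. Writing the solution as $x(t) = e^{tM}x^0$ and applying $\frac{d}{dt}e^{tM} = Me^{tM}$ repeatedly gives the first line; the transpose rule $(AB)^T = B^TA^T$ gives the second, which is why the row vector $(x^0)^T$ annihilates every block of the matrix in the rank condition.

$$
\begin{aligned}
\frac{d^k}{dt^k}\bigl(Ne^{tM}x^0\bigr) &= NM^k e^{tM}x^0
  \quad\Longrightarrow\quad
  \frac{d^k}{dt^k}\bigl(Ne^{tM}x^0\bigr)\Big|_{t=0} = NM^k x^0, \\
\bigl(NM^k x^0\bigr)^T &= (x^0)^T (M^k)^T N^T = (x^0)^T (M^T)^k N^T .
\end{aligned}
$$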

2. Assume now that (1.12) is not controllable. Then $\operatorname{rank}[N^T, \dots, (M^T)^{n-1}N^T] < n$, and consequently, according to Theorem 2.3, there exists $x^0 \neq 0$ such that

$$(x^0)^T\left[N^T, \dots, (M^T)^{n-1}N^T\right] = 0.$$

That is, $NM^kx^0 = 0$ for all $k = 0, 1, 2, \dots, n-1$.

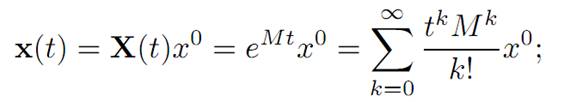

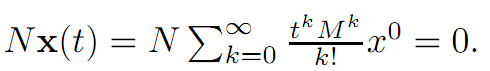

We want to show that $y(t) = Nx(t) \equiv 0$, where

$$\begin{cases}\dot{x}(t) = Mx(t)\\ x(0) = x^0.\end{cases}$$

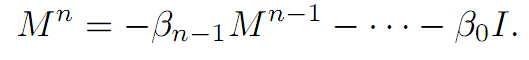

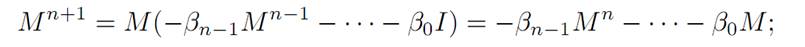

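According to the Cayley–Hamilton Theorem, we can write

$$M^n = -\beta_{n-1}M^{n-1} - \cdots - \beta_1 M - \beta_0 I$$

for appropriate constants $\beta_0, \dots, \beta_{n-1}$. Consequently $NM^nx^0 = 0$. Likewise,

$$M^{n+1} = M\left(-\beta_{n-1}M^{n-1} - \cdots - \beta_0 I\right) = -\beta_{n-1}M^n - \cdots - \beta_0 M,$$

and so $NM^{n+1}x^0 = 0$. Similarly (by induction on $k$), $NM^kx^0 = 0$ for all $k$.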
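The Cayley–Hamilton step can be checked on a concrete matrix. The sketch below computes the coefficients $\beta_0, \dots, \beta_{n-1}$ of $\det(\lambda I - M)$ with the Faddeev–LeVerrier recursion (our choice of method; the text does not say how the constants are found), confirms $M^n + \beta_{n-1}M^{n-1} + \cdots + \beta_0 I = 0$, and then checks that $NM^kx^0$ stays zero beyond $k = n-1$. The matrices $M$, $N$ and the vector $x^0$ are hypothetical.

```python
from fractions import Fraction

def matmul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def identity(n):
    return [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]

def char_poly_coeffs(A):
    """Return [beta_0, ..., beta_{n-1}, 1], the coefficients of det(lambda*I - A),
    using the Faddeev-LeVerrier recursion with exact rational arithmetic."""
    n = len(A)
    I = identity(n)
    beta = [Fraction(0)] * n + [Fraction(1)]
    Mk = [[Fraction(0)] * n for _ in range(n)]  # M_0 = 0
    for k in range(1, n + 1):
        AM = matmul(A, Mk)
        Mk = [[AM[i][j] + beta[n - k + 1] * I[i][j] for j in range(n)] for i in range(n)]
        AMk = matmul(A, Mk)
        beta[n - k] = -sum(AMk[i][i] for i in range(n)) / k
    return beta

def poly_at_matrix(coeffs, A):
    """Evaluate sum_k coeffs[k] * A^k."""
    n = len(A)
    result = [[Fraction(0)] * n for _ in range(n)]
    P = identity(n)  # current power A^k
    for c in coeffs:
        result = [[result[i][j] + c * P[i][j] for j in range(n)] for i in range(n)]
        P = matmul(P, A)
    return result

# Hypothetical example: an unobservable pair with N x0 = N M x0 = 0 (here n = 2).
M = [[0, 1], [-2, -3]]
N = [[1, 1]]
x0 = [[1], [-1]]  # column vector

beta = char_poly_coeffs(M)  # det(lambda I - M) = lambda^2 + 3 lambda + 2
residual = poly_at_matrix(beta, M)
print(all(v == 0 for row in residual for v in row))  # True: Cayley-Hamilton holds

# N M^k x0 for k = 0, ..., 2n (checked only up to this finite bound).
values, P = [], x0
for k in range(2 * len(M) + 1):
    values.append(matmul(N, P)[0][0])
    P = matmul(M, P)
print(values)  # [0, 0, 0, 0, 0]
```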

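Now

$$x(t) = e^{tM}x^0 = \sum_{k=0}^{\infty}\frac{t^kM^k}{k!}\,x^0,$$

and therefore

$$Nx(t) = \sum_{k=0}^{\infty}\frac{t^k}{k!}\,NM^kx^0 = 0 \qquad (t \geq 0).$$

Since the zero solution also gives $Nx(t) \equiv 0$, the observations cannot distinguish the initial value $x^0 \neq 0$ from $0$. We have thus shown that if (1.12) is not controllable, then (1.11) is not observable.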
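The last step expands $x(t) = e^{tM}x^0$ in its power series. As a floating-point illustration (the truncation length, helper names, and example are assumptions, not from the text), the sketch sums $\sum_{k<K} t^kM^kx^0/k!$ for a hypothetical pair with $NM^kx^0 = 0$ and checks that $Nx(t)$ vanishes up to rounding while $x(t)$ itself does not, so $x^0$ and the zero initial value give the same observations.

```python
import math

def matvec(A, v):
    """Matrix-vector product for a matrix given as a list of rows."""
    return [sum(a * b for a, b in zip(row, v)) for row in A]

def exp_series(M, x0, t, terms=40):
    """Approximate e^{tM} x0 by the truncated series sum_{k<terms} t^k M^k x0 / k!."""
    total = [0.0] * len(x0)
    term = [float(v) for v in x0]  # k = 0 term: x0
    for k in range(terms):
        total = [s + u for s, u in zip(total, term)]
        term = [t * u / (k + 1) for u in matvec(M, term)]  # t^{k+1} M^{k+1} x0 / (k+1)!
    return total

# Hypothetical example: M x0 = -x0, so x(t) = e^{-t} x0, and N x0 = 0.
M = [[0.0, 1.0], [-2.0, -3.0]]
N = [[1.0, 1.0]]
x0 = [1.0, -1.0]

for t in [0.0, 0.5, 1.0, 2.0]:
    x_t = exp_series(M, x0, t)
    y_t = matvec(N, x_t)[0]
    error = max(abs(x_t[0] - math.exp(-t)), abs(x_t[1] + math.exp(-t)))
    print(f"t={t}: N x(t) = {y_t:.1e}, series error = {error:.1e}")
```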

